2 Key Lessons From Facebook’s Video Views Metrics Fiasco

People have short-term (or selective) memory. When they can’t remember something, they fall back on how they think it should be. Facebook recently found itself in the hot seat for this very reason.

Facebook metrics definition issue

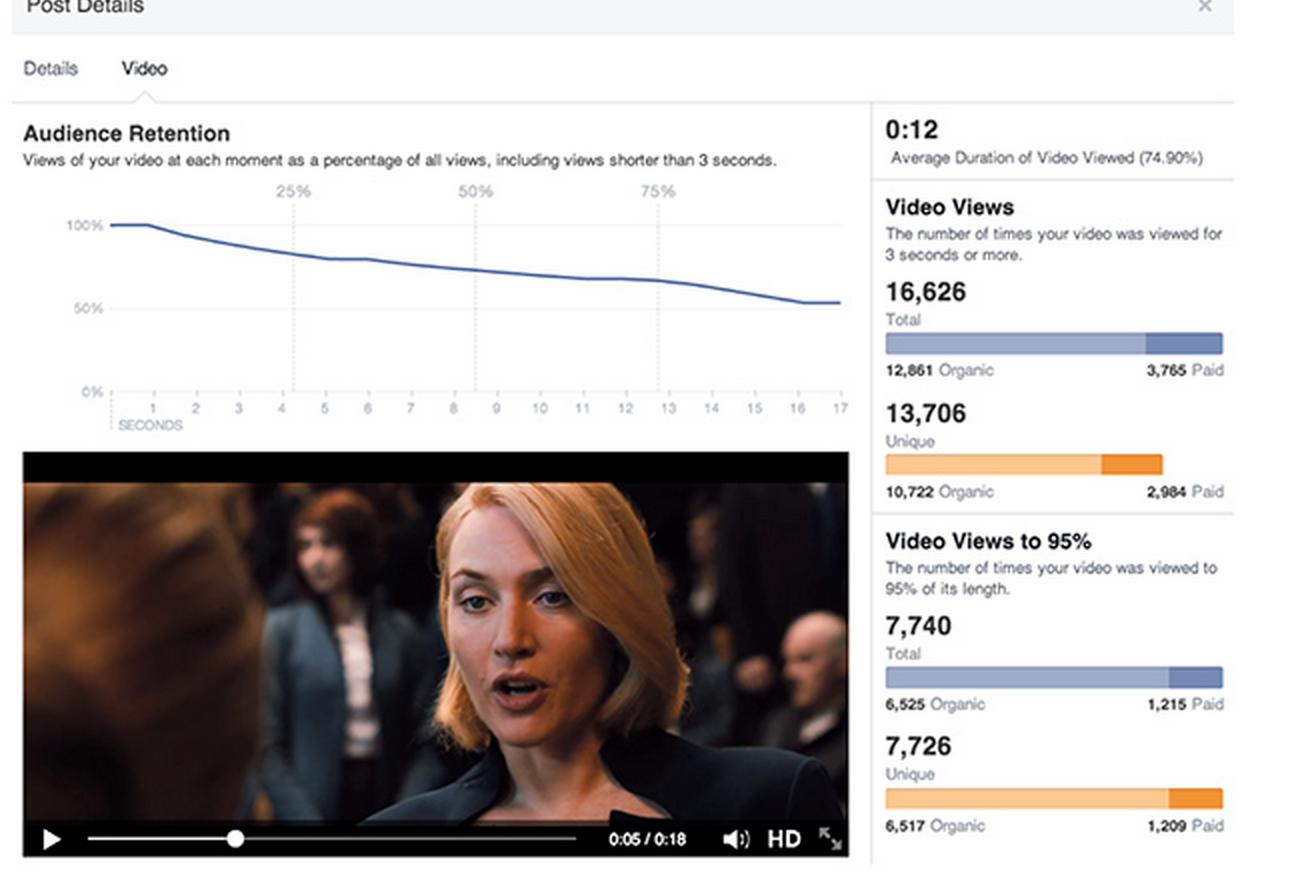

Facebook has a metric called “Video View” for video ads. This metric counts a video as viewed only if the viewer watched it for 3 seconds or more. In other words, if someone watches a video for 2 seconds, that play is not counted as a view.

Facebook also has another metric, “Average duration of Video views.” The “standard” definition of this metric would be total time spent watching the video divided by total viewers. However, that’s not how Facebook defined it. In September, the Wall Street Journal reported that Facebook had “vastly overestimated average viewing time for video ads on its platform for two years.” This led to an apology from Facebook:

About a month ago, we found an error in the way we calculate one of the video metrics on our dashboard – average duration of video viewed. The metric should have reflected the total time spent watching a video divided by the total number of people who played the video. But it didn’t – it reflected the total time spent watching a video divided by only the number of “views” of a video (that is, when the video was watched for three or more seconds). And so the miscalculation overstated this metric. While this is only one of the many metrics marketers look at, we take any mistake seriously.
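To see why dividing by “views” instead of viewers inflates the number, here is a minimal Python sketch of the two formulas described in the apology. The function names and the sample play durations are hypothetical, and it assumes each play comes from a different person; it is not Facebook’s actual calculation code.

```python
VIEW_THRESHOLD_SECONDS = 3  # a play counts as a "view" once it reaches 3 seconds


def avg_duration_per_viewer(play_seconds):
    """Intended metric: total watch time / number of people who played the video."""
    if not play_seconds:
        raise ValueError("no plays recorded")
    return sum(play_seconds) / len(play_seconds)


def avg_duration_per_view(play_seconds, threshold=VIEW_THRESHOLD_SECONDS):
    """Reported metric: total watch time / number of 3+ second "views" only."""
    views = [s for s in play_seconds if s >= threshold]
    if not views:
        raise ValueError("no plays reached the view threshold")
    # The numerator still includes the short plays; only the denominator shrinks.
    return sum(play_seconds) / len(views)


# Hypothetical data: seconds watched per play, one play per person.
plays = [1, 2, 2, 5, 10]

print(avg_duration_per_viewer(plays))  # 20 / 5 -> 4.0
print(avg_duration_per_view(plays))    # 20 / 2 -> 10.0
```

The numerator is the same in both versions; only the denominator changes. Because plays shorter than 3 seconds add their watch time to the total but are dropped from the count, the per-view average can never be lower than the per-viewer average, and the more short plays a video gets, the bigger the overstatement.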

According to a DM News article, Facebook did state the definition when it rolled out this metric two years ago, so it was not actually doing anything wrong. It was a case of short-term memory.

“The problem, as critics put it, is a problem of omission. While Facebook very clearly states that it’s only counting views as any video-play event that lasts longer than three seconds, it does not go out of its way to explicitly beat readers over the head with the fact that this definition of a “video view” applies equally to the calculation of average duration of video views.”

If Facebook’s product team had read my 2012 posts on “Creating a Culture of Analytics,” they might well have avoided this “scandal.” The two issues Facebook dealt with are exactly the ones I wrote about in those posts. To recap, here is the gist of the two posts:

- Make sure everybody clearly understands how metrics are defined. I talked about this in my 2012 post, Standard Definitions of Metrics: Creating a Culture of Analytics. In that post I said:

A lack of standard definitions for metrics causes people to report different numbers for supposedly the same metrics, leading to confusion and a total lack of trust in data. No trust in data means that nobody is going to use the data to make strategic decisions, and there go all your efforts to create a culture of Analytics.

Having standard definitions is not as easy as it sounds. It starts with you and your team having a clear understanding of how to calculate various metrics. Some seemingly simple metrics can be calculated in several different ways, and all of those ways might be right, but agreeing on one standard way of calculating them removes confusion and gets everybody on the same page.

- People have short-term memory. In my 2012 post, titled Dealing with Short-Term Memory: Creating a Culture of Analytics, I wrote:

We all make assumptions from time to time; sometimes we state them clearly and sometimes we just assume them in our own heads. We then operate under those assumptions. In the context of Analytics, one such assumption is that everybody knows what the goals and KPIs are. We might have defined them at the onset of the program or campaign, or at the beginning of the month, quarter, or year, but once they are defined we start to assume that everybody knows about them and is operating with those goals in mind.

Well, the truth is that people have short-term memory. They do forget, and then they start to interpret the KPIs, defined based on those goals, in their own way. As the Analytics head/analyst/manager, it is your job to constantly remind stakeholders of the goals and KPIs.

Two Lessons

This fiasco offers two great lessons for all Digital Analytics teams.

- Clearly define your metrics and make sure the underlying metrics and calculations are clear in your definition.

- Don’t make assumptions; people have short-term memory. Just because you stated a KPI’s definition in the past does not mean everybody will remember it or know how it was calculated. It is your job to make sure anybody using your metrics/KPIs can get to the definition and calculations right away.
